By Anthony Watts

While BEST is making a public relations train wreck for themselves by touting preliminary conclusions with no data analysis paper in place yet to support their announcements, there have been other things going on in the world of surface data integrity and uncertainty. This particular paper is something I have been assisting with (by providing metadata and images, plus being part of the review team) since May of last year. Regular WUWT readers may recognize the photo on the cover as being the USHCN station sited over a tombstone in Hanksville, UT photographed by volunteer Juan Slayton.

What is interesting about it is that the author is the president of a private company, WeatherSource, which describes itself as:

Weather Source is the premier provider of high quality historical and real-time digital weather information for 10,000’s of locations across the US and around the world.

What is unique about this company is that they provide weather data to high profile, high risk clients that use weather data in determining risk, processes, and likely outcomes. These clients turn to this company because they provide a superior product: surface data cleaned and deburred of many of the problems that exist in the NCDC data set.

I saw a presentation from them last year, and it was the first time we had ever met. It was quite some meeting, because for the first time, after seeing our station photographs, they had visual confirmation of the problems they surmised from examining data. It was a meeting of the minds, data and metadata.

Here’s what they say about their cleaned weather data:

Weather Source maintains a one-of-a-kind database of weather information built from a combination of multiple datasets merged into one comprehensive “super” database of weather information unlike anything else on the market today. By merging together numerous different datasets, voids in one dataset are often filled by one of the other datasets, resulting in a database that has higher data densities and is more complete than any of the original datasets. In addition, all candidate data are quality controlled and corrected where possible before being accepted into the Weather Source database. The end result is a “super” database that is more complete and more accurate than any other weather database in existence - period!

It is this “super” database that is at the core of all Weather Source products and services. You can rest assured knowing that the products and services obtained through Weather Source are the best available.
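To make the gap-filling idea concrete, here is a minimal Python sketch of merging several sources in priority order. It is not WeatherSource's proprietary process, which is not public; the function names, the priority-order rule, and all temperature values are invented for illustration.

```python
from typing import Dict, List, Optional

# One series maps a date string to a daily maximum temperature (deg F).
# None marks a missing observation. All values below are invented.
Series = Dict[str, Optional[float]]


def merge_sources(sources: List[Series]) -> Series:
    """Merge series in priority order: the first non-missing value wins."""
    merged: Series = {}
    all_dates = sorted({date for series in sources for date in series})
    for date in all_dates:
        merged[date] = next(
            (s[date] for s in sources if s.get(date) is not None), None
        )
    return merged


def completeness(series: Series, dates: List[str]) -> float:
    """Fraction of the requested dates that have a value."""
    return sum(series.get(d) is not None for d in dates) / len(dates)


dates = ["2011-03-01", "2011-03-02", "2011-03-03", "2011-03-04"]
primary: Series = {"2011-03-01": 54.0, "2011-03-02": None,
                   "2011-03-03": 61.0, "2011-03-04": None}
secondary: Series = {"2011-03-01": 55.0, "2011-03-02": 57.0,
                     "2011-03-04": None}
tertiary: Series = {"2011-03-04": 63.0}

merged = merge_sources([primary, secondary, tertiary])
print(completeness(primary, dates), completeness(merged, dates))
```

In this toy case the primary source covers half of the dates, while the merged series covers all of them. A real system would also need the quality control and correction step described in the quote, which this sketch omits.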

They say this about historical data:

Comprehensive and robust are the two words which best describe the Weather Source Historical Observations Database.

Comprehensive because our database is composed of base data from numerous sources. From these we create merged datasets that are more complete than any single source. This allows us to provide you with virtually any weather information you may need.

Robust because our system of data quality control and cleaning methods ensure that the data you need is accurate and of the highest quality.

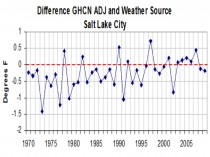

As an example, here is a difference comparison of their cleaned data set with a GHCN station:

The processes they use are not unlike those of BEST; the emphasis is on solving problems with the historical data gathered by the NOAA volunteer operated Cooperative Observer Network (COOP). The COOP network also has a smaller subset, the U.S. Historical Climatology Network (USHCN), which is a set of stations hand picked by researchers at the National Climatic Data Center (NCDC) based on station record length and moves:

USHCN stations were chosen using a number of criteria including length of record, percent of missing data, number of station moves and other station changes that may affect data homogeneity, and resulting network spatial coverage.

The problem is that until the surfacestations.org project came along, they never actually looked at the measurement environment of the stations. Nor did they even bother to tell the volunteer operators of those stations that they were special, so that the operators would perform an extra measure of due diligence in gathering data and in ensuring that the stations met the most basic of siting rules, such as the NOAA 100 foot rule:

Temperature sensor siting: The sensor should be mounted 5 feet +/- 1 foot above the ground. The ground over which the shelter [radiation] is located should be typical of the surrounding area. A level, open clearing is desirable so the thermometers are freely ventilated by air flow. Do not install the sensor on a steep slope or in a sheltered hollow unless it is typical of the area or unless data from that type of site are desired. When possible, the shelter should be no closer than four times the height of any obstruction (tree, fence, building, etc.). The sensor should be at least 100 feet from any paved or concrete surface.
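The numeric parts of that guideline are simple enough to express as a checklist. The following Python sketch is only an illustration: the `Siting` record and its field names are hypothetical, the example measurements are invented, and the qualitative criteria (representative ground cover, slope, ventilation) are not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Siting:
    """Hypothetical survey measurements for one station, all in feet."""
    sensor_height_ft: float
    nearest_paved_ft: float         # distance to nearest paved/concrete surface
    obstruction_height_ft: float    # height of the nearest obstruction
    obstruction_distance_ft: float  # distance to that obstruction


def siting_problems(s: Siting) -> List[str]:
    """Return the numeric guideline criteria that the site fails."""
    problems = []
    if not 4.0 <= s.sensor_height_ft <= 6.0:
        problems.append("sensor height outside 5 ft +/- 1 ft")
    if s.obstruction_distance_ft < 4.0 * s.obstruction_height_ft:
        problems.append("obstruction closer than 4x its height")
    if s.nearest_paved_ft < 100.0:
        problems.append("paved or concrete surface within 100 ft")
    return problems


# Invented examples: one site near a building and a parking lot, one open site.
crowded = Siting(sensor_height_ft=5.0, nearest_paved_ft=30.0,
                 obstruction_height_ft=20.0, obstruction_distance_ft=50.0)
open_site = Siting(sensor_height_ft=5.0, nearest_paved_ft=150.0,
                   obstruction_height_ft=10.0, obstruction_distance_ft=60.0)

print(siting_problems(crowded))
print(siting_problems(open_site))
```

The first site fails both the obstruction and the 100 foot criteria, while the second passes all three numeric checks.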

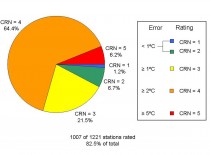

As many know, and as NOAA concedes in their own publications on the issue, about 1 in 10 weather stations in the USHCN meet the 100 foot rule. That is the basis of the criticisms I have been making for some time - that siting has introduced unaccounted-for biases in the data.

WeatherSource is a private company catering to business using a special data cleaning process that removes many, but not all of the data integrity issues and biases that have been noted. I’ve seen a detailed flowchart and input/output comparisons on their processes and have confidence that they are addressing issues in a way that provides a superior data set. However, being a business, they have to keep their advantage close, and I have agreed not to disclose their processes which are their legal intellectual property.

The data cleaning techniques of the BEST team made known to me when I met with them in February 2011 were similar, but they promised to use publicly available data and to make the process fully transparent and replicable, as shown on their web page.

So, knowing that BEST had independently identified surface data issues that needed attention, and because there was some overlap in the ideas pioneered by the privately held intellectual properties of WeatherSource with the open source promise of BEST, you can imagine my excitement at the prospect.

Unfortunately, on March 31st in full public view before Congress BEST fumbled the ball, and in football parlance there was a turnover to the opposing “team”. BEST released some potentially buggy and admittedly incomplete preliminary results (which I’ve seen the basis for) with no transparency or replicability whatsoever. BEST doesn’t even have a paper submitted yet.

However, the WeatherSource team has produced a paper, and they have it online today. While it has not been peer reviewed by a journal, it has been reviewed by a number of professional people, and of course with this introduction on WUWT, is about to be reviewed worldwide in the largest review venue it could possibly get.

It should be noted that the data analysis was done entirely by Mr. Gibbas of WeatherSource. My role was limited to providing metadata, photographs from our surfacestations project, and editing support.

Here’s the full paper.

See also Anthony’s response to Muller in this SPPI post.

by Bob Carter, Alan Moran & David Evans

Addressing the facts on climate change and energy

An internal strategy paper has been provided to Labor MPs for use in the promotion of the Government’s proposed new carbon dioxide tax. We offer critiques of the two most substantive parts of that paper, namely “Carbon Price” and “Climate Impact on Australia”. The full text of the paper is posted (pdf) here… Select analysis points are shown. Statements in bold italics are allegations (numbered by us) from the strategy paper; our responses are in ordinary typeface:

1a. We believe climate change is real...

Climate change is real and continuous. 20,000 years ago present day New York was under a kilometre of ice and lower sea levels meant that early Australians were able to walk to Tasmania; and just 300 years ago, during the “Little Ice Age” the world was again significantly colder than today.

Australians who witnessed the 2009 Victorian bushfires or this year’s Queensland floods and cyclones need no reminder that hazardous climate events and change are real. That is not the issue.

The issue is that use of the term “climate change” here is code for “dangerous global warming caused by human carbon dioxide emissions”. The relevant facts are:

(i) that mild warming of a few tenths of a degree occurred in the late 20th century, but that so far this century global temperature has not risen; and

(ii) that no direct evidence, as opposed to speculative computer projections, exists to demonstrate that the late 20th century warming was dominantly, or even measurably, caused by human-sourced carbon dioxide emissions.

2. We want the top 1,000 biggest polluting companies to pay for each tonne of carbon (sic) pollution they produce.

Carbon dioxide is not a pollutant, but rather a natural and vital trace gas in Earth’s atmosphere, an environmental benefit without which our planetary ecosystems could not survive. Increasing carbon dioxide makes many plants grow faster and better, and helps to green the planet. If carbon dioxide were to drop to a third of current levels, most plant life on the planet, followed by animal life, would die.

As Ross Garnaut recognises, all businesses, including even the corner shop, are going to be paying for carbon dioxide emissions. In the long run, businesses must pass on the tax to their customers and ultimately the cost will fall on individual consumers.

A price on carbon dioxide will impose a deliberate financial penalty on all energy users. This will initially impact on the costs of all businesses, and energy-intensive industries in particular will lose international competitiveness.

The so-called “big polluters” are part of the bedrock of the Australian economy. Ultimately, any cost impost on them will either be passed on to consumers or will result in the disappearance of the activities, with accompanying direct and indirect employment.

3. A carbon price will provide incentives for the big polluters to reduce their carbon pollution.

All companies must pass on their costs to consumers, or go bankrupt. A price on carbon dioxide will encourage firms to reduce emissions, but their ability to do so is limited.

The owners of fossil fuelled electricity power stations that are unable to pass on the full cost of a carbon dioxide tax will see lower profits, and the stations with the highest emission levels will be forced to close prematurely. If investors expect the tax to be permanent, power stations with higher emissions will be replaced by power stations with lower emissions whose lower carbon dioxide taxes enable them to undercut the tax-enhanced cost of the established firms. The higher cost replacement generators will set a higher price for all electricity.

Alternatively, if investors lack confidence that the tax will be permanent, the new more expensive, lower emissions power stations may be perceived as too risky to attract investment. This will cause a progressive deterioration in the system’s ability to meet demand.

6. We will protect existing jobs while creating new clean energy jobs.

The whole point of a carbon dioxide tax is to force coal-fired power stations out of existence. No amount of subsidy will “protect” the jobs of the workers involved.

It has been shown that in Spain, 2.2 conventional jobs are destroyed for every new job created in the alternative energy industry, at a unit cost of about US$774,000/job. In a comparable UK study the figures were even worse, with the destruction of 3.7 conventional jobs for every 1 new job.

7. Every cent raised by the carbon (sic) price will go to households, protecting jobs in businesses in transition and investment in climate change programs. There will be generous assistance to households, families and pensioners (tax cuts are a live option).

As with any tax, the proceeds are returned to the community. One part of this, perhaps 20 per cent or so, is required to administer the program and is a deadweight loss. The rest is a redirection of funding to areas that the government considers to be more productive, or more politically supportive. This invariably leads to a loss of efficiency within the economy and to a slower growth rate.

It is also the case that introduction of a new tax generally results in unanticipated costs, which, because they are unknown, taxpayers cannot be compensated for.

CLIMATE IMPACT ON AUSTRALIA

8. We have to act now to avoid the devastating consequences of climate change.

Of itself carbon dioxide, even at concentrations tenfold those of the present, is not harmful to humans. Projections usually assume a doubling of emissions and associate this with an increase in global temperatures.

There is no “climate emergency”, nor did devastating consequences result from the mild warming of the late 20th century. Global average temperature, which peaked in the strong El Nino year of 1998, still falls well within the bounds of natural climate variation. Current global temperatures are in no way unusually warm, or cold, in terms of Earth’s recent geological history.

9. If we don’t act then we will see more extreme weather events like bushfires and droughts, we will have more days of extreme heat, and we will see our coastline flooded as sea levels rise.

These alarmist statements are based exclusively on a naïve faith that computer models can make predictions about future climate states. That faith cannot be justified, as even the modelling practitioners themselves concede.

The computer models that have yielded the speculative projections quoted in the strategy paper are derived from organisations like CSIRO, which includes the following disclaimer at the front of all its computer modelling consultancy reports:

This report relates to climate change scenarios based on computer modelling. Models involve simplifications of the real processes that are not fully understood.

Accordingly, no responsibility will be accepted by CSIRO or the QLD government for the accuracy of forecasts or predictions inferred from this report or for any person’s interpretations, deductions, conclusions or actions in reliance on this report.

SUMMARY

The headline-seeking, adverse environmental outcomes that are highlighted in the strategy paper are therefore as inaccurate and exaggerated as were Hansen’s 1988 temperature projections.

There is no global warming crisis, and model-based alarmist projections of the type that permeate the strategy paper are individually and severally unsuitable for use in public policy making. Read the full summary.

By Christine Hall

Washington, D.C., April 1, 2011 - The scientific hypotheses underlying global warming alarmism are overwhelmingly contradicted by real-world data, and for that reason economic studies on the alleged benefits of controlling greenhouse gas emissions are baseless. That’s the finding of a new peer-reviewed report by a former EPA whistleblower.

Dr. Alan Carlin, now retired, was a career environmental economist at EPA when CEI broke the story of his negative report on the agency’s proposal to regulate greenhouse gases in June, 2009. Dr. Carlin’s supervisor had ordered him to keep quiet about the report and to stop working on global warming issues. EPA’s attempt to silence Dr. Carlin became a highly-publicized embarrassment to the agency, given Administrator Lisa Jackson’s supposed commitment to transparency.

Dr. Carlin’s new study, A Multidisciplinary, Science-Based Approach to the Economics of Climate Change, is published in the International Journal of Environmental Research and Public Health. It finds that fossil fuel use has little impact on atmospheric CO2 levels. Moreover, the claim that atmospheric CO2 has a strong positive feedback effect on temperature is contradicted on several grounds, ranging from low atmospheric sensitivity to volcanic eruptions, to the lack of ocean heating and the absence of a predicted tropical “hot spot.”

However, most economic analyses of greenhouse gas emission controls, such as those being imposed by EPA, have been conducted with no consideration of the questionable nature of the underlying science. For that reason, according to Dr. Carlin, the actual “economic benefits of reducing CO2 emissions may be about two orders of magnitude less” than what is claimed in those reports.

Sam Kazman, CEI General Counsel, stated, “One of the major criticisms of Dr. Carlin’s EPA report was that it was not peer-reviewed, even though peer-review was neither customary for internal agency assessments, nor was it possible due to the time constraints imposed on Dr. Carlin by the agency. For that reason, we are glad to see this expanded version of Dr. Carlin’s report now appear as a peer-reviewed study.”

Read the full report: A Multidisciplinary, Science-Based Approach to the Economics of Climate Change

See also the CEI OnPoint, “Clearing the Air on the EPA’s False Regulatory Benefit-Cost Estimates and Its Anti-Carbon Agenda” by Garrett A. Vaughn